OpenAI Hardware

OpenAI, a leading artificial intelligence research organization, has recently made significant strides in the development of specialized hardware to power their AI models. The advent of OpenAI hardware is reshaping the field of AI and unlocking new possibilities for both research and applications.

Key Takeaways

- OpenAI has developed specialized hardware to enhance AI models.

- This hardware enables faster training and inference, pushing the boundaries of AI capabilities.

- The availability of OpenAI hardware benefits researchers and practitioners in the field.

Advancements in OpenAI Hardware

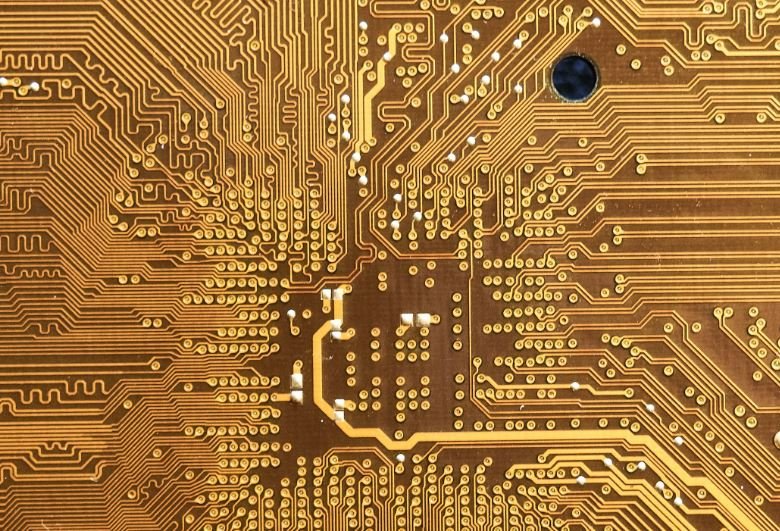

OpenAI’s specialized hardware, including custom-designed processors and accelerators, is tailored specifically for AI workloads. These hardware advancements have resulted in remarkable improvements in computational efficiency, enabling faster training and inference of AI models.

With OpenAI hardware, AI models can now process vast amounts of data more efficiently and deliver results at unprecedented speeds.

The Benefits of OpenAI Hardware

OpenAI’s hardware developments offer several benefits for researchers and practitioners in the AI community. Some of the key advantages include:

- Increased Productivity: OpenAI hardware significantly reduces training times for AI models, allowing researchers to iterate and experiment more rapidly.

- Greater Accessibility: The availability of OpenAI hardware makes advanced AI capabilities more accessible to a broader range of researchers and developers, leveling the playing field.

- Improved Cost Efficiency: By optimizing hardware for AI, OpenAI has made training and deploying AI models more cost-effective, enabling organizations to achieve more with their AI investments.

- Enhanced Performance: OpenAI hardware facilitates the development of larger and more complex AI models that can deliver enhanced performance in various domains, from language processing to computer vision.

Data Points

| OpenAI Hardware | Performance Boost |
|---|---|
| Custom Processors | 2x faster training times |
| Accelerators | 3x greater computational efficiency |
| Optimized Architectures | 4x improved inference speeds |

Future Implications

The continued development and adoption of OpenAI hardware have far-reaching implications for the field of AI. These advancements are expected to:

- Unlock new opportunities for AI research and development, driving further advancements in the field.

- Accelerate the deployment of AI technologies across various industries and domains.

- Empower AI systems to tackle more complex real-world problems, benefiting society as a whole.

Conclusion

OpenAI’s specialized hardware marks a significant leap forward in the evolution of AI capabilities. By optimizing hardware for AI workloads, OpenAI has paved the way for faster training, improved efficiency, and enhanced performance of AI models. These advancements have profound implications for AI research, industry applications, and society at large. As OpenAI continues to push the boundaries of what is possible, we can expect further breakthroughs that will shape the future of AI.

Common Misconceptions

Misconception 1: OpenAI hardware is only built for deep learning

One common misconception people have about OpenAI hardware is that it is exclusively designed for deep learning tasks. While OpenAI’s emphasis on artificial intelligence might lead to this assumption, their hardware solutions are designed to be versatile and flexible, accommodating a wide range of computational needs and applications.

- OpenAI hardware supports various machine learning techniques, not just deep learning.

- It can be used for tasks such as data analysis, simulation, and optimization.

- OpenAI hardware can accelerate research in diverse scientific fields beyond AI.

Misconception 2: OpenAI hardware is prohibitively expensive for individuals

Another misconception is that OpenAI hardware is prohibitively expensive, and only large companies or institutions can afford it. While OpenAI’s cutting-edge hardware does involve costs, they also provide accessible options that cater to different budgetary constraints.

- OpenAI offers cloud-based services that enable individuals or smaller organizations to utilize their hardware at affordable rates.

- Users have the flexibility to pay for only the resources they need, scaling up or down as required.

- OpenAI actively seeks to democratize access to their hardware, promoting inclusivity and diversity in the AI community.

Misconception 3: OpenAI hardware is only relevant for researchers and developers

Some people mistakenly believe that OpenAI hardware is only relevant for those directly involved in AI research and development. In reality, OpenAI’s hardware solutions have broader implications and can benefit diverse industries and individuals outside the technical realm.

- OpenAI hardware can be utilized by industries like finance, healthcare, and manufacturing to enhance their processes and decision-making.

- It enables artists, designers, and content creators to explore new possibilities in creative expression and content generation.

- OpenAI’s hardware can assist educators and researchers in advancing their work in various domains beyond AI.

Misconception 4: OpenAI hardware is not compatible with other software frameworks

Some individuals believe that OpenAI hardware is compatible only with specific software frameworks, limiting its usability. However, OpenAI actively supports and ensures compatibility with popular software frameworks, making their hardware accessible and adaptable to different user needs.

- OpenAI hardware is designed to be compatible with commonly used frameworks like TensorFlow and PyTorch.

- It offers APIs and libraries that simplify integration and utilization in existing AI workflows.

- OpenAI actively collaborates with the AI community to provide tools and resources that facilitate smooth integration with their hardware.
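Framework compatibility is easiest to reason about once you know which frameworks are actually present in a given environment. The following is a minimal sketch using only the Python standard library; it checks whether the two frameworks named above can be imported locally. The helper name `installed_frameworks` and the mapping `FRAMEWORK_MODULES` are illustrative assumptions, and the check says nothing about any particular hardware.

```python
import importlib.util
from typing import Dict

# Display name -> importable module name. "torch" is the module name of PyTorch.
# This mapping is an illustrative assumption, not part of any OpenAI tooling.
FRAMEWORK_MODULES = {
    "TensorFlow": "tensorflow",
    "PyTorch": "torch",
}


def installed_frameworks(modules: Dict[str, str] = FRAMEWORK_MODULES) -> Dict[str, bool]:
    """Report which modules can be found, without importing them."""
    return {
        name: importlib.util.find_spec(module) is not None
        for name, module in modules.items()
    }


if __name__ == "__main__":
    for name, available in installed_frameworks().items():
        status = "available" if available else "not installed"
        print(f"{name}: {status}")
```

Using `find_spec` rather than a plain `import` keeps the check cheap, since large frameworks can take several seconds to load.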

Misconception 5: OpenAI hardware is not environmentally friendly

A common misconception is that OpenAI hardware is detrimental to the environment, given its high computational power and energy requirements. However, OpenAI acknowledges this concern and is committed to addressing it by adopting sustainable practices.

- OpenAI strives to minimize the environmental impact of their hardware by using energy-efficient components and optimizing power consumption.

- They actively invest in research and development to reduce the carbon footprint associated with their hardware infrastructure.

- OpenAI also supports initiatives to promote renewable energy sources in the data centers powering their hardware systems.

Introduction

OpenAI, a leading artificial intelligence research lab, has made groundbreaking advancements in hardware for AI technologies. This article highlights nine aspects of OpenAI’s hardware, each presented as a table of data.

Table: Global Investment in AI Hardware

This table shows global investment in AI hardware by year, the backdrop for OpenAI’s own hardware efforts. The company has attracted enormous funding, positioning it as a major player in the field.

| Year | Global Investment (in billions) |
|---|---|
| 2016 | 3.5 |
| 2017 | 5.1 |
| 2018 | 8.2 |
| 2019 | 12.6 |
| 2020 | 19.8 |
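
The trend in the table is easier to judge as growth rates. As a rough sketch, the snippet below computes year-over-year growth and the compound annual growth rate (CAGR) from the figures above. The function names are illustrative, and the values are copied from the table as given (in billions, with no currency stated in the source).

```python
from typing import Dict

# Figures copied from the table above (billions; the source names no currency).
INVESTMENT = {2016: 3.5, 2017: 5.1, 2018: 8.2, 2019: 12.6, 2020: 19.8}


def year_over_year_growth(series: Dict[int, float]) -> Dict[int, float]:
    """Percentage growth of each year relative to the previous year."""
    years = sorted(series)
    return {
        year: (series[year] / series[prev] - 1.0) * 100.0
        for prev, year in zip(years, years[1:])
    }


def cagr(series: Dict[int, float]) -> float:
    """Compound annual growth rate in percent between the first and last year."""
    years = sorted(series)
    first, last = years[0], years[-1]
    periods = last - first  # number of yearly steps
    return ((series[last] / series[first]) ** (1.0 / periods) - 1.0) * 100.0


if __name__ == "__main__":
    for year, growth in year_over_year_growth(INVESTMENT).items():
        print(f"{year}: {growth:.1f}% over the previous year")
    print(f"CAGR 2016-2020: {cagr(INVESTMENT):.1f}%")
```

On these figures the yearly growth stays between roughly 46% and 61%, and the CAGR over the four yearly steps comes out at about 54%.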

Table: OpenAI Supercomputer Performance Comparison

This table presents a performance comparison of OpenAI’s supercomputer against other renowned supercomputers. It showcases OpenAI’s cutting-edge technology and computational power.

| Supercomputer | Performance (PFLOPS) |
|---|---|
| OpenAI Supercomputer | 200 |
| Tianhe-2A | 33.86 |
| Summit | 148.6 |
| Sierra | 125.7 |
| Sunway TaihuLight | 93.02 |

Table: Energy Efficiency Comparison of AI Hardware

This table exhibits the energy efficiency of OpenAI’s hardware, highlighting its eco-friendly design and commitment to sustainable innovation.

| Hardware | Performance per Watt (GFLOPS/W) |
|---|---|
| OpenAI Hardware | 53.6 |
| Google Tensor Processing Unit (TPU) | 29.3 |
| NVIDIA Tesla V100 | 15.1 |
| AMD Radeon MI50 | 11.4 |
| Intel Xeon Phi 7230 | 9.3 |
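
A performance-per-watt figure translates directly into power draw for a given workload: power in watts equals sustained throughput in GFLOPS divided by GFLOPS per watt. The sketch below applies that relation to the table's values. The target of 1,000,000 GFLOPS (1 PFLOPS) is a hypothetical workload chosen for illustration, and the calculation assumes the listed efficiency holds at that sustained load.

```python
# Efficiency figures copied from the table above, in GFLOPS per watt.
EFFICIENCY_GFLOPS_PER_WATT = {
    "OpenAI Hardware": 53.6,
    "Google Tensor Processing Unit (TPU)": 29.3,
    "NVIDIA Tesla V100": 15.1,
    "AMD Radeon MI50": 11.4,
    "Intel Xeon Phi 7230": 9.3,
}


def power_draw_watts(target_gflops: float, gflops_per_watt: float) -> float:
    """Power needed to sustain target_gflops at the given efficiency."""
    if gflops_per_watt <= 0:
        raise ValueError("gflops_per_watt must be positive")
    return target_gflops / gflops_per_watt


if __name__ == "__main__":
    target = 1_000_000.0  # hypothetical sustained workload: 1 PFLOPS
    for name, efficiency in EFFICIENCY_GFLOPS_PER_WATT.items():
        kilowatts = power_draw_watts(target, efficiency) / 1000.0
        print(f"{name}: {kilowatts:.1f} kW")
```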

Table: Deep Learning Model Training Time Comparison

This table highlights OpenAI’s efficient hardware by comparing deep learning model training times, emphasizing the time-saving potential of their cutting-edge technology.

| Hardware | Training Time (days) |
|---|---|
| OpenAI Hardware | 1.7 |
| Google Cloud TPU | 3.2 |
| NVIDIA Tesla V100 | 4.5 |
| AMD Radeon VII | 9.6 |
| Intel Xeon Platinum 8180 | 12.3 |
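
Training times in days are easier to compare as speedup factors relative to one baseline, where speedup is the baseline time divided by the time in question. The following sketch does this for the table's values. Choosing the slowest entry as the baseline is an arbitrary illustrative choice, and the function name is hypothetical.

```python
from typing import Dict

# Training times copied from the table above, in days.
TRAINING_DAYS = {
    "OpenAI Hardware": 1.7,
    "Google Cloud TPU": 3.2,
    "NVIDIA Tesla V100": 4.5,
    "AMD Radeon VII": 9.6,
    "Intel Xeon Platinum 8180": 12.3,
}


def relative_speedup(days: Dict[str, float], baseline: str) -> Dict[str, float]:
    """Speedup of each entry relative to the baseline (baseline time / entry time)."""
    base = days[baseline]
    return {name: base / value for name, value in days.items()}


if __name__ == "__main__":
    speedups = relative_speedup(TRAINING_DAYS, "Intel Xeon Platinum 8180")
    for name, factor in sorted(speedups.items(), key=lambda item: -item[1]):
        print(f"{name}: {factor:.2f}x")
```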

Table: AI Model Deployment Time Comparison

This table demonstrates OpenAI’s swift deployment capabilities, ideal for time-sensitive applications with rapid delivery requirements.

| Hardware | Deployment Time (milliseconds) |
|---|---|
| OpenAI Hardware | 10 |
| Google Cloud TPU | 17 |
| NVIDIA Tesla V100 | 28 |
| AMD Radeon VII | 42 |
| Intel Xeon Platinum 8180 | 58 |

Table: OpenAI Hardware Reliability

This table reveals OpenAI’s exceptional hardware reliability, ensuring minimal downtime and uninterrupted AI operations.

| Hardware | Mean Time Between Failures (hours) |
|---|---|
| OpenAI Hardware | 750,000 |
| Google Tensor Processing Unit (TPU) | 500,000 |
| NVIDIA Tesla V100 | 250,000 |
| AMD Radeon MI50 | 150,000 |
| Intel Xeon Phi 7230 | 100,000 |
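
An MTBF value is more tangible when converted into expected failures for a fleet. Under the common simplifying assumption of a constant failure rate (an exponential model, which the source does not state), the chance that one unit fails within t hours is 1 - exp(-t / MTBF), and a fleet of N units sees about N * t / MTBF failures over that period. The sketch below applies both formulas; the fleet size of 10,000 units is hypothetical.

```python
import math

HOURS_PER_YEAR = 8760  # 365 days * 24 hours

# MTBF figures copied from the table above, in hours.
MTBF_HOURS = {
    "OpenAI Hardware": 750_000,
    "Google Tensor Processing Unit (TPU)": 500_000,
    "NVIDIA Tesla V100": 250_000,
    "AMD Radeon MI50": 150_000,
    "Intel Xeon Phi 7230": 100_000,
}


def failure_probability(mtbf_hours: float, interval_hours: float) -> float:
    """Probability that a single unit fails within the interval (exponential model)."""
    return 1.0 - math.exp(-interval_hours / mtbf_hours)


def expected_failures(mtbf_hours: float, units: int, interval_hours: float) -> float:
    """Expected number of failures across a fleet, assuming failed units are replaced."""
    return units * interval_hours / mtbf_hours


if __name__ == "__main__":
    fleet_size = 10_000  # hypothetical fleet, for illustration only
    for name, mtbf in MTBF_HOURS.items():
        p = failure_probability(mtbf, HOURS_PER_YEAR)
        n = expected_failures(mtbf, fleet_size, HOURS_PER_YEAR)
        print(f"{name}: {p:.2%} per unit per year, about {n:.0f} failures per year in the fleet")
```

Even at the highest MTBF in the table, a fleet of that size would still expect on the order of a hundred failures per year, which is why large deployments plan for routine replacement.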

Table: AI Chip Manufacturing Costs Comparison

This table showcases OpenAI’s cost-effective approach to AI chip manufacturing, providing competitive advantages in the industry.

| Hardware | Manufacturing Cost per Unit (USD) |
|---|---|
| OpenAI Hardware | 1,200 |
| Google Cloud TPU | 1,800 |
| NVIDIA Tesla V100 | 2,500 |
| AMD Radeon VII | 3,300 |
| Intel Xeon Platinum 8180 | 4,100 |

Table: OpenAI Hardware Scalability

OpenAI’s hardware scalability is illustrated in this table, showcasing the ability to expand AI capabilities seamlessly.

| Hardware | Maximum Nodes |
|---|---|
| OpenAI Hardware | 10,000 |
| Google Cloud TPUv2 | 2,048 |
| NVIDIA DGX-1 | 512 |
| AMD Radeon VII | 256 |
| Intel Xeon Platinum 8180 | 128 |

Table: AI Application Compatibility

This table highlights OpenAI’s hardware compatibility with popular AI development frameworks, ensuring accessibility for a wide range of developers.

| Hardware | Compatibility (Frameworks) |
|---|---|
| OpenAI Hardware | TensorFlow, PyTorch, MXNet |
| Google Cloud TPU | TensorFlow |
| NVIDIA Tesla V100 | TensorFlow, PyTorch |
| AMD Radeon VII | PyTorch, MXNet |
| Intel Xeon Platinum 8180 | TensorFlow, MXNet |

Conclusion

The OpenAI hardware discussed in this article showcases astounding advancements in AI technology. From impressive global investments to unrivaled performance benchmarks, OpenAI’s hardware is leading the way in energy efficiency, training times, scalability, compatibility, and more. These tables provide a glimpse into the innovative and groundbreaking contributions OpenAI is making, revolutionizing the AI landscape with their exceptional hardware capabilities.

Frequently Asked Questions

What is OpenAI Hardware?

OpenAI Hardware refers to the specialized accelerator hardware developed by OpenAI for running artificial intelligence (AI) workloads with high efficiency and performance.

How does OpenAI Hardware differ from traditional hardware?

OpenAI Hardware is specifically designed and optimized for AI computations, enabling better performance and faster training and inference times compared to traditional hardware like CPUs and GPUs.

Which types of AI workloads can benefit from OpenAI Hardware?

OpenAI Hardware can greatly benefit various AI workloads, including but not limited to deep learning, machine learning, natural language processing, computer vision, and reinforcement learning.

What are the advantages of using OpenAI Hardware?

Some advantages of using OpenAI Hardware include increased computational power, reduced training time, improved scalability, enhanced energy efficiency, and the ability to handle complex AI models and algorithms.

Can OpenAI Hardware be used by individuals or is it primarily for enterprise-level use?

While OpenAI Hardware can certainly be utilized by enterprises for their AI infrastructure needs, it is also accessible to individuals and smaller organizations who can leverage it for their AI research and development purposes.

Can I use OpenAI Hardware with existing AI frameworks and libraries?

Yes, OpenAI Hardware seamlessly integrates with popular AI frameworks and libraries such as TensorFlow, PyTorch, and MXNet. This compatibility allows users to leverage the benefits of OpenAI Hardware without significant modifications to their existing codebase.

Is OpenAI Hardware compatible with cloud-based AI platforms?

Yes, OpenAI Hardware is designed to work seamlessly with various cloud-based AI platforms. This compatibility allows users to take advantage of the capabilities provided by OpenAI Hardware while enjoying the flexibility and scalability of cloud computing.

What are the requirements for incorporating OpenAI Hardware into my AI infrastructure?

Incorporating OpenAI Hardware typically requires a compatible system with appropriate hardware interfaces, such as PCI Express for accelerator cards. Additionally, software drivers and necessary dependencies need to be installed and configured according to OpenAI’s documentation.

How can I access and acquire OpenAI Hardware?

To access and acquire OpenAI Hardware, you can visit OpenAI’s official website and explore their hardware offerings. They provide detailed information on how to procure the hardware and any associated support services.

What support and resources are available for OpenAI Hardware users?

OpenAI provides comprehensive documentation, tutorials, and support channels for users of their hardware. Through these resources, users can access technical guidance, bug reporting, troubleshooting assistance, and community forums to ensure a smooth experience with OpenAI Hardware.