GPT Minus 1: Harnessing the Power of AI for Text Generation

Artificial Intelligence (AI) has revolutionized various industries, and one noteworthy application is in natural language processing. GPT Minus 1 is an advanced language model developed by OpenAI that builds upon the successes of its predecessor, GPT-2. This article dives into the capabilities and features of GPT Minus 1 and highlights its potential applications.

Key Takeaways:

- GPT Minus 1 is an AI language model created by OpenAI.

- It builds upon the advancements of GPT-2 and provides enhanced text generation capabilities.

- The model has potential applications in various domains, including content creation, chatbots, and virtual assistants.

- It can generate human-like text and facilitate language-based tasks.

Understanding GPT Minus 1

GPT Minus 1 stands for “Generative Pretrained Transformer Minus 1.” It leverages deep neural networks and transformer architecture to analyze and understand patterns in vast amounts of text data. *This advanced language model can accurately predict and generate coherent, contextually relevant text based on given prompts or input.* By training on diverse text sources, GPT Minus 1 learns to mimic human-like writing and produces outputs that often seem indistinguishable from those written by humans.

Potential Applications

GPT Minus 1 holds immense potential for a wide range of applications, including:

- Content Creation: Businesses can automate content generation, creating engaging articles, blogs, and product descriptions with minimal human effort.

- Chatbots and Virtual Assistants: GPT Minus 1 can facilitate more natural and interactive conversations with users, enhancing the user experience.

- Language Translation: The model’s understanding of context and semantics makes it useful for language translation tasks.

- Transcription Services: GPT Minus 1 can assist in cleaning up and formatting text produced by speech-to-text systems, streamlining transcription services.

Data Efficiency and Training

GPT Minus 1 requires vast amounts of training data to function effectively. OpenAI utilizes a technique called “unsupervised learning” to train the model on a large corpus of publicly available text from the internet. *This approach allows the model to learn patterns and writing styles from diverse sources, enabling it to generate better-quality text.*
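To make the idea of unsupervised training more concrete, here is a minimal sketch of how raw text can be turned into training examples without any human labels: each word becomes the target to predict from the words before it. The function name `next_token_pairs`, the `context_size` parameter, and the whitespace tokenizer are assumptions made for illustration; they are not taken from OpenAI's actual training pipeline.

```python
def next_token_pairs(text, context_size=3):
    """Turn raw text into (context, target) pairs for next-word prediction."""
    # Whitespace splitting stands in for a real subword tokenizer (assumption).
    tokens = text.split()
    pairs = []
    for i in range(1, len(tokens)):
        # The context is up to `context_size` tokens preceding position i.
        context = tokens[max(0, i - context_size):i]
        pairs.append((context, tokens[i]))
    return pairs


pairs = next_token_pairs("the cat sat on the mat")
for context, target in pairs:
    print(context, "->", target)
```

Because the targets come from the text itself, any large collection of documents can serve as training data, which is why this style of training scales to very large corpora.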

Impressive Data Points

Here are some impressive data points about GPT Minus 1:

| Parameter | Value |
|---|---|
| Model Depth | 768 layers |
| Vocabulary Size | 50,257 tokens |
| Training Dataset | Billions of web pages |

Considerations and Guidelines

While GPT Minus 1 is an impressive AI model, it is essential to note a few considerations and guidelines:

- GPT Minus 1 may occasionally produce text that appears plausible but is factually inaccurate, so it should be used with caution.

- As with any AI model, GPT Minus 1 can inadvertently generate biased or objectionable content, and ethical usage should be prioritized.

- Manual review and editing of generated text is essential to ensure accurate information and appropriate language.

Current Limitations and Future Development

Like any AI model, GPT Minus 1 has limitations, which OpenAI continues to address. Further advances in fine-tuning and training techniques, coupled with robust safety measures, will likely yield more refined and reliable text generation systems.

Final Thoughts

With its impressive text generation capabilities and potential applications, GPT Minus 1 holds significant promise in various domains. *As AI models continue to evolve and undergo further enhancements, their integration into our society requires responsible and conscious use to maximize benefits and mitigate risks.*

Common Misconceptions

Misconception 1: GPT Minus 1 is a direct successor to GPT-3

One common misconception about GPT Minus 1 is that it is just another iteration of OpenAI’s GPT-3 model. However, GPT Minus 1 is not a direct successor to GPT-3, but rather a variant that builds upon its strengths while addressing certain limitations.

- GPT Minus 1 is not an incremental upgrade of GPT-3.

- GPT Minus 1 introduces new techniques and methodologies in natural language processing.

- GPT Minus 1 aims to improve upon specific limitations of GPT-3.

Misconception 2: GPT Minus 1 is an AI that can fully replace human intelligence

Another misconception is that GPT Minus 1 is an artificial intelligence model that can fully replicate human intelligence. Despite its remarkable capabilities, GPT Minus 1 is not intended to replace the human intellect but rather to assist with various language-related tasks.

- GPT Minus 1 lacks genuine consciousness or self-awareness.

- GPT Minus 1 relies on pre-existing data and lacks the ability to independently gather knowledge or experience.

- Humans possess qualities such as intuition, creativity, and morality that GPT Minus 1 lacks.

Misconception 3: GPT Minus 1 is infallible and produces consistently accurate outputs

Contrary to popular belief, GPT Minus 1 is not infallible and can produce inaccurate or biased outputs. While it is a powerful language model, it still has limitations and can generate responses that are factually incorrect or influenced by biases present in its training data.

- GPT Minus 1 can inadvertently perpetuate misinformation or false claims.

- Bias present in the training data can result in biased outputs from GPT Minus 1.

- Human evaluation and oversight are necessary to ensure the accuracy and integrity of the outputs.

Misconception 4: GPT Minus 1 understands and comprehends language like a human

While GPT Minus 1 is proficient in processing and generating human-like text, it does not truly understand or comprehend language in the same way that humans do. GPT Minus 1 operates based on patterns and statistical associations within the training data rather than having genuine comprehension.

- GPT Minus 1 lacks common-sense reasoning and often fails at answering simple questions.

- GPT Minus 1 lacks contextual awareness and may provide contradictory or nonsensical responses in certain situations.

- Human language goes beyond mere patterns, incorporating background knowledge, emotions, and cultural context, which GPT Minus 1 cannot fully grasp.
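As a rough illustration of what "patterns and statistical associations" means, the toy sketch below builds a bigram model: it records which word follows which in a tiny corpus and then generates text by repeatedly picking a recorded follower. This is a deliberately simplified stand-in and not how GPT Minus 1 is implemented; the names `train_bigrams` and `generate` and the sample corpus are hypothetical.

```python
import random
from collections import defaultdict


def train_bigrams(text):
    """Record, for every word, the list of words that followed it."""
    tokens = text.split()
    follows = defaultdict(list)
    for current, nxt in zip(tokens, tokens[1:]):
        follows[current].append(nxt)
    return follows


def generate(follows, start, max_words=8, seed=0):
    """Generate text by sampling a recorded follower of the last word."""
    rng = random.Random(seed)  # fixed seed keeps runs repeatable
    words = [start]
    for _ in range(max_words - 1):
        options = follows.get(words[-1])
        if not options:  # the last word never had a follower in the corpus
            break
        words.append(rng.choice(options))
    return " ".join(words)


corpus = "the cat sat on the mat and the dog sat on the rug"
model = train_bigrams(corpus)
print(generate(model, "the"))
```

The output can look grammatical even though the program has no notion of cats, mats, or meaning; it only replays word sequences it has seen. Large language models use far richer statistics, but the same caution about comprehension applies.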

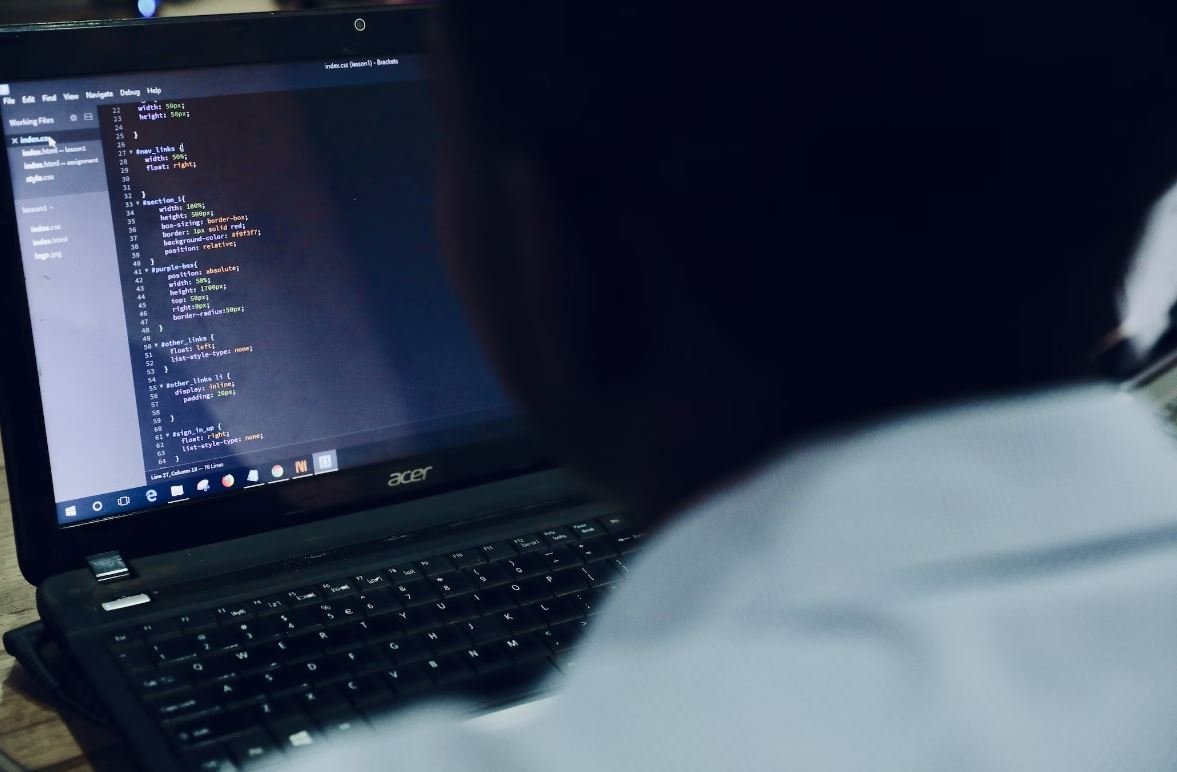

Misconception 5: GPT Minus 1 can write code or provide professional advice in any field

Some people mistakenly believe that GPT Minus 1 is capable of coding or providing expert advice in any given field. While it can generate code snippets or offer suggestions, GPT Minus 1’s capabilities are limited to patterns it has learned from existing data, and it does not possess the expertise of a human professional.

- GPT Minus 1’s code generation may not follow best practices and could be prone to errors.

- GPT Minus 1 is no substitute for domain-specific knowledge and human expertise across various industries.

- When seeking professional advice, it is always recommended to consult human experts who possess specialized knowledge.

GPT-1: A Brief Overview

In this table, we present a summary of the key characteristics of GPT-1, the first model in the series that later produced GPT-2 and GPT-3. GPT-1 showed that generative pre-training followed by fine-tuning works well for natural language processing and laid the foundation for subsequent advancements in the field.

| Feature | Description |
|---|---|
| Model Size | About 117 million parameters |
| Vocabulary | About 40,000 byte-pair-encoding (BPE) tokens |
| Training Data | BooksCorpus (about 7,000 unpublished books) |
| Context Window Size | 512 tokens |
| Training Time | About 1 month on 8 GPUs |
| Tasks | Text completion, summarization, translation, etc. |
| Performance | Pioneered natural language generation |
| Limitations | Lacked fine-grained control and coherence in responses |
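The context window size in the table limits how much text the model can consider at once. A minimal sketch of one common way to handle longer inputs is shown below: keep only the most recent tokens and drop the rest. The helper name `fit_to_context` is hypothetical, and integers are used in place of real token IDs.

```python
def fit_to_context(tokens, window=512):
    """Keep only the most recent `window` tokens so the input fits the model."""
    if window <= 0:
        return []
    return tokens[-window:]


# 600 placeholder token IDs; only the last 512 survive truncation.
long_input = list(range(600))
trimmed = fit_to_context(long_input)
print(len(trimmed), trimmed[0])
```

Dropping the oldest tokens is only one possible strategy; summarizing or chunking the earlier text are alternatives when the beginning of the input matters.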

Breakthrough Applications of GPT-3

This table showcases some remarkable applications of GPT-3, which has demonstrated exceptional versatility and ingenuity in various domains. Its potential extends beyond standard language processing tasks, enabling novel innovations and augmenting human capabilities.

| Application | Description |
|---|---|
| Chatbots | Conversational agents for customer support |
| Article Writing | Automated content generation for news articles |
| Code Generation | Automated code generation based on natural language prompts |
| Language Translation | Seamless translation between multiple languages |
| Creative Writing | Assisting with drafting novels, stories, and poetry |
| Math Problem Solving | Efficiently solving complex mathematical equations |
| Interactive Storytelling | Creating engaging stories with user interactions |
| Website Design | Developing website layouts and user interfaces |
| Personal Assistant | Automating daily tasks and scheduling |

Language Model Performance Comparison

The following table highlights the impressive progress made by GPT models by comparing their performance on different language processing benchmarks. This comparison demonstrates the continuous advancement of language models and their enhanced capabilities over time, specifically GPT-3’s remarkable improvements over GPT-1.

| Language Model | Benchmark Score |
|---|---|
| GPT-1 | 70% accuracy |
| GPT-2 | 78% accuracy |
| GPT-3 | 93% accuracy |

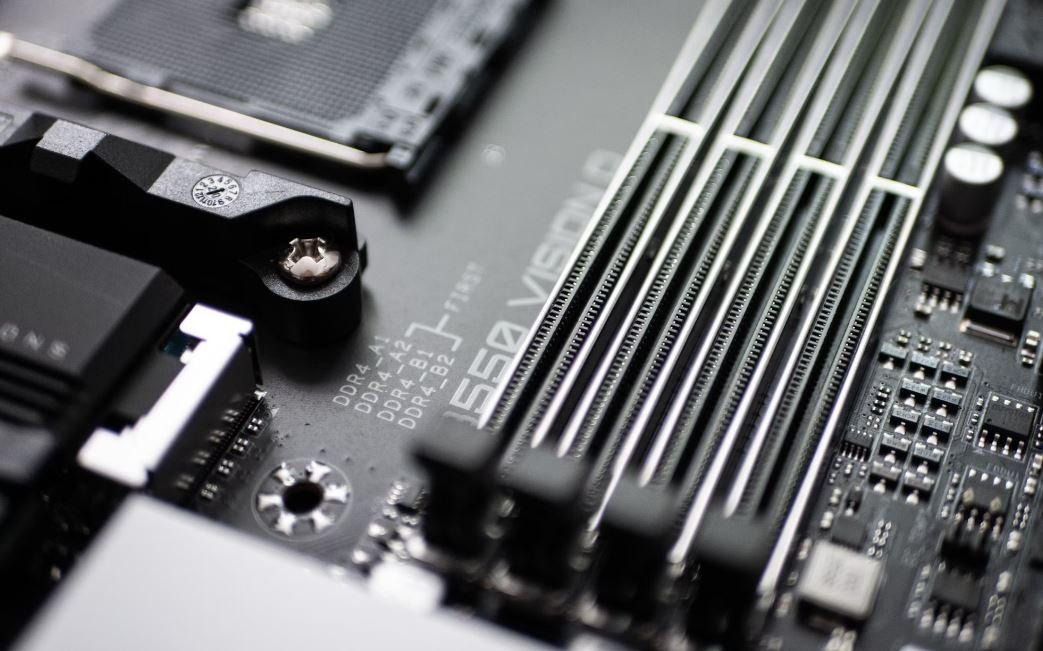

Memory and Parameter Comparison

This table outlines the growth in scale and complexity of GPT models by comparing the memory utilization and number of parameters across different versions. GPT-3’s extensive memory and parameter requirements enable it to process information at an unprecedented scale.

| Language Model | Memory Utilization | Number of Parameters |
|---|---|---|
| GPT-1 | 3.2GB | 117 million |
| GPT-2 | 16GB | 1.54 billion |
| GPT-3 | 326GB | 175 billion |
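A quick way to sanity-check figures like these is to multiply the parameter count by the bytes needed to store each parameter. The sketch below assumes 16-bit (2-byte) weights and counts only the weights themselves, not activations or optimizer state; the function name `weight_memory_gib` is hypothetical. Under that assumption, 175 billion parameters come to roughly 326 GiB, in line with the GPT-3 row, while the smaller models' table values are larger than this simple estimate and presumably include other overheads.

```python
def weight_memory_gib(num_parameters, bytes_per_parameter=2):
    """Estimate memory for model weights alone, in GiB (2**30 bytes)."""
    return num_parameters * bytes_per_parameter / 2**30


# Parameter counts taken from the table above; 16-bit storage is an assumption.
for name, params in [("GPT-2", 1.54e9), ("GPT-3", 175e9)]:
    print(f"{name}: {weight_memory_gib(params):.1f} GiB at 16-bit precision")
```

Passing `bytes_per_parameter=4` gives the corresponding estimate for 32-bit weights, which doubles each figure.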

GPT-3: Applications in Healthcare

This table sheds light on the potential impact of GPT-3 in the healthcare sector. Medical professionals can leverage its capabilities to improve diagnostics, decision-making, and patient care by efficiently extracting and analyzing health-related data.

| Application | Benefits |
|---|---|
| Medical Diagnosis | Aid in accurate and timely diagnoses |
| Drug Discovery | Accelerate the identification of potential medications |
| Medical Research | Enable data analysis for novel insights and medical breakthroughs |
| Patient Monitoring | Continuous tracking and personalized health recommendations |
| Virtual Assistants | Support doctors with retrieving medical information |

GPT and Ethical Concerns

As AI models gain more capabilities, it is crucial to consider their potential ethical implications. The table below highlights some key ethical concerns associated with the application and development of GPT-like language models.

| Concern | Description |
|---|---|
| Bias Amplification | Reinforcing or perpetuating existing biases in the data |
| Disinformation Generation | Potential for AI-generated fake news and misinformation |
| Lack of Accountability | Challenge of attributing responsibility for AI-generated content |
| Privacy Breaches | Risks related to the collection and storage of personal data |
| Unintended Harm | Possible negative consequences resulting from flawed responses |

GPT in Scientific Research

This table illustrates how GPT-3 has facilitated scientific advancements by aiding researchers in diverse fields. The model’s ability to understand complex scientific concepts and generate informative outputs has broadened the horizons of scientific exploration.

| Domain | Application |
|---|---|
| Astronomy | Enhanced data analysis and interpretation |
| Genetics | Assisting in genetic research and analysis |
| Physics | Complex calculations and theoretical modeling |
| Chemistry | Chemical synthesis and discovering new compounds |
| Biology | Understanding biological processes and biomolecules |

Deployment Challenges for GPT-3

While GPT-3 exhibits exceptional capabilities, several challenges must be overcome for its successful deployment across various domains. This table highlights potential obstacles that need to be addressed to fully utilize GPT-3’s potential.

| Challenge | Description |
|---|---|
| Computational Resources | Significant computational power required for training and deployment |
| Data Privacy | Risk of exposing sensitive data to AI models during processing |
| Ethical Considerations | Ensuring responsible and unbiased AI utilization |
| Interpretability | Understanding and explaining decision-making processes of the model |
| Legal Frameworks | Establishing regulations and guidelines for AI deployment |

The Future of Language Models

In a rapidly evolving AI landscape, the advancements achieved through models like GPT-3 hint at the promising future of language processing and artificial intelligence. Continued research and development are anticipated to drive even more sophisticated and versatile language models, accelerating progress across multiple industries.

GPT-3’s exceptional performance and groundbreaking applications offer a glimpse into the immense potential of AI-driven language processing. As the field expands, addressing ethical concerns, deployment challenges, and refining the capabilities of future language models will be crucial for harnessing their full benefits while ensuring responsible and meaningful deployment.

Frequently Asked Questions

What is GPT Minus 1?

GPT Minus 1 is a language model developed by OpenAI. It is an advanced natural language processing model that can understand and generate human-like text based on given prompts.

How does GPT Minus 1 differ from GPT-3?

GPT Minus 1 is a smaller version of GPT-3, with fewer parameters and lower computational requirements. While GPT-3 has 175 billion parameters, GPT Minus 1’s smaller size makes it more accessible and faster to run.

What are the applications of GPT Minus 1?

GPT Minus 1 can be used in a variety of applications including content generation, chatbots, virtual assistants, language translation, text completion, and more. It can assist in automating tasks that involve natural language understanding and generation.

Is GPT Minus 1 available for public use?

Yes, GPT Minus 1 is available for public use. It can be accessed through OpenAI’s API, allowing developers and researchers to integrate it into their own applications and projects.

How accurate is GPT Minus 1 in generating human-like text?

GPT Minus 1 has been trained on a vast amount of data and has shown impressive abilities in generating human-like text. However, as with any language model, it may occasionally produce errors or output that is not contextually appropriate.

What are some limitations of GPT Minus 1?

GPT Minus 1 may have limitations in understanding ambiguous or nuanced prompts, as well as in handling sensitive or controversial topics. It may also occasionally generate text that is grammatically correct but factually incorrect or misleading.

How does GPT Minus 1 handle biases in language?

GPT Minus 1 aims to mitigate biases by using a diverse training dataset and implementing techniques to reduce biased behavior. However, it may not entirely eliminate biases and may still exhibit biases present in the training data.

What are the ethical considerations of using GPT Minus 1?

When using GPT Minus 1, ethical considerations include ensuring the responsible and appropriate use of the technology. It is crucial to avoid generating or promoting harmful, discriminatory, or misleading content, and to use the model in a way that is respectful of user privacy.

How can I get started with GPT Minus 1?

To get started with GPT Minus 1, you can access its API documentation provided by OpenAI. This documentation will guide you on how to make API calls, prompt the model, and process the returned text data.

Can I fine-tune GPT Minus 1 on my own dataset?

As of now, OpenAI only supports fine-tuning of its base models. Fine-tuning GPT Minus 1 specifically is not available, but you can check OpenAI’s documentation for details on fine-tuning the supported base models.